Envoy – Data Plane

Envoy, originally built at Lyft, is a high-performance proxy that sits in the path of all traffic for every service in the service mesh.

- Within a service mesh, each application endpoint (whether a container, a pod, or a host, and however these are set up in your deployments) is configured to route traffic to a local proxy (installed as a sidecar container, for example).

- That local proxy exposes primitives for managing retry logic, encryption, custom routing rules, service discovery, health checking, load balancing, authentication/authorization, observability, and more. A collection of these proxies forms a “mesh” of services that share common network traffic management properties. The proxies can be controlled from a centralized control plane, where operators compose policies that affect the behavior of the entire mesh.

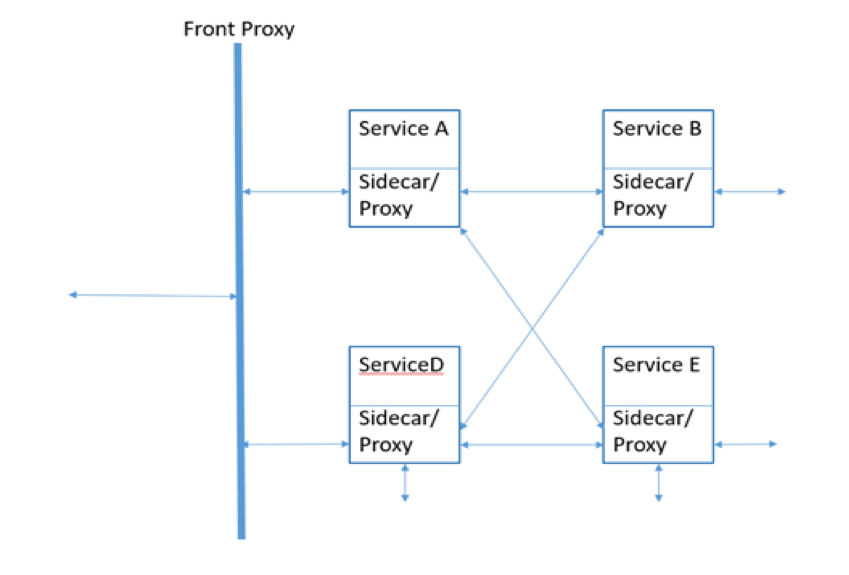

- In a service mesh, Envoy is configured as that local proxy: as a sidecar, as a front proxy, or both.

- As a sidecar, it handles service-to-service traffic, also called east-west traffic: traffic within a data center, i.e. server-to-server traffic.

- As a front proxy, it handles client-to-server traffic, also called north-south traffic: traffic between the data center and the rest of the network (anything outside the data center).

Envoy as a Front Proxy

A front proxy is the single point of entry and exit for traffic flowing between the service mesh and the outside world (the clients), that is, north-south traffic.

Let’s see how to deploy Envoy as a front proxy with Docker.

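- docker-compose.yml

```yaml
version: '3'

services:
  front-envoy2:
    build:
      context: .
      dockerfile: Dockerfile-frontenvoy
    volumes:
      - ./front_proxy.yaml:/etc/front_proxy.yaml
    networks:
      - envoymesh
    expose:
      - "8002"
      - "8301"
    ports:
      - "8002:8002"   # admin interface
      - "9001:8301"   # listener, reachable from the host on port 9001

# The network referenced above must be declared at the top level.
networks:
  envoymesh: {}
```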

First, we point the build at the Dockerfile that installs and starts Envoy. Then we mount a volume containing the configuration file that makes this Envoy a front proxy. Finally, we expose the ports used in that configuration and map each container port to a host port so that it is reachable from outside the container.

Following are some of the other files:

- Dockerfile-frontenvoy

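```dockerfile
# Pin a specific Envoy release rather than relying on a floating tag.
FROM envoyproxy/envoy:v1.28-latest
# Install curl inside the image.
RUN apt-get update && apt-get -q install -y \
    curl
CMD ["/usr/local/bin/envoy", "-c", "/etc/front_proxy.yaml", "--service-cluster", "front-proxy2"]
```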

- front_proxy.yaml

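```yaml
admin:
  # No admin access log is configured (the equivalent of writing it to /dev/null).
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 8002

static_resources:
  listeners:
  - name: front_listener
    address:
      socket_address:
        address: 0.0.0.0
        port_value: 8301
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          codec_type: AUTO
          stat_prefix: ingress_http
          route_config:
            name: local_route
            virtual_hosts:
            - name: backend
              domains:
              - "*"
              routes:
              # Requests whose path starts with /api go to the dotnetservice cluster.
              - match:
                  prefix: "/api"
                route:
                  cluster: dotnetservice
          http_filters:
          - name: envoy.filters.http.router
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

  clusters:
  - name: dotnetservice
    connect_timeout: 0.25s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    dns_lookup_family: V4_ONLY
    load_assignment:
      cluster_name: dotnetservice
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: service1   # the service's hostname on the envoymesh network
                port_value: 80
```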

- The ports in this file (8002 for the admin interface, 8301 for the listener) are the container ports that the compose file maps to host ports 8002 and 9001.

- `routes` is where we map the URL path prefixes of the service endpoints to a cluster; the cluster holds the rules to apply to that service.

- The prefix in a route (`/api` here) is the path a client calls from outside, and the address in the cluster (`service1`, port 80) is the address of the actual service.

- `clusters` is where we configure the rules for the service whose address the cluster lists, for example the connection timeout, the load-balancing policy, health checks and circuit breakers. Retries and per-request timeouts, by contrast, are configured on the route.

- This also adds a layer of security: the client cannot call the service directly, only through the front proxy, which enforces the rules configured for the service.
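The split between route-level and cluster-level rules described above can be sketched as follows. This is a hedged fragment assuming the Envoy v3 API: it adds retries and an overall timeout to the `/api` route, and a health check plus a different load-balancing policy to the `dotnetservice` cluster. The `/health` path and all numeric values are hypothetical.

```yaml
# Hypothetical extension of front_proxy.yaml (Envoy v3 API).

# Goes under route_config.virtual_hosts[0]: retries and timeouts are route-level.
routes:
- match:
    prefix: "/api"
  route:
    cluster: dotnetservice
    timeout: 5s                     # upper bound for the whole request, retries included
    retry_policy:
      retry_on: "5xx,connect-failure"
      num_retries: 3
      per_try_timeout: 2s           # bound for each individual attempt

# Goes under static_resources: health checking and load balancing are cluster-level.
clusters:
- name: dotnetservice
  connect_timeout: 0.25s
  type: STRICT_DNS
  lb_policy: LEAST_REQUEST          # instead of ROUND_ROBIN
  dns_lookup_family: V4_ONLY
  health_checks:
  - timeout: 1s
    interval: 10s
    unhealthy_threshold: 3
    healthy_threshold: 2
    http_health_check:
      path: "/health"               # hypothetical health endpoint on service1
  load_assignment:
    cluster_name: dotnetservice
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address:
              address: service1
              port_value: 80
```

Keeping retries on the route lets several routes share one cluster with different retry budgets, while endpoint health and load balancing remain properties of the service itself.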

Envoy as Sidecar

Along similar lines, we deploy our sidecar and map its ports. Note: we should not map the service’s own ports to host ports; if we did, the service would be directly accessible without going through the sidecar. Here the sidecar and the service run as separate containers on the same Docker network (`envoymesh`), so the sidecar reaches the service over that network, while clients outside Docker cannot reach the service because its port 80 is only exposed on the network, not published to the host.

Let’s see how to deploy the service and its sidecar with Docker:

- Docker Compose file:

```yaml
version: '3'

services:
  sidecar:
    build:
      context: .
      dockerfile: Dockerfile-serviceEnvoy
    volumes:
      - ./service_proxy.yaml:/etc/service_proxy.yaml
    networks:
      envoymesh:
        aliases:
          - sidecar
    expose:
      - "8005"
      - "8201"
    ports:
      - "8005:8005"

  service1:
    image: webapp1:latest
    networks:
      envoymesh:
        aliases:
          - service1
    environment:
      - SERVICE_NAME=1
    expose:
      - "80"

networks:
  envoymesh: {}
```

The difference is that our front proxy no longer goes to the service directly but through the sidecar. So all traffic into and out of the service, whether it comes from the front proxy (north-south) or from other services (east-west), passes through the sidecar.

In the picture above, the left side shows a front proxy only, and the right side shows a front proxy together with a sidecar.

So the cluster in the front proxy now points at the sidecar’s address, and the sidecar, which is responsible only for its own service, points at that service’s address. In the sidecar we can configure the service’s specific endpoints rather than a common prefix. The sidecar thus adds one more layer of security and rules in front of the service.
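On the front proxy side, the change could look like the fragment below, a sketch assuming the Envoy v3 API. The `sidecar` hostname is the network alias from the compose file. That the sidecar listens on port 8201 (exposed on `envoymesh` but not published), with 8005 as its admin port, is an assumption based on the ports that compose file lists.

```yaml
# front_proxy.yaml, clusters section (Envoy v3 API): the front proxy now
# targets the sidecar's listener instead of service1.
clusters:
- name: dotnetservice
  connect_timeout: 0.25s
  type: STRICT_DNS
  lb_policy: ROUND_ROBIN
  dns_lookup_family: V4_ONLY
  load_assignment:
    cluster_name: dotnetservice
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address:
              address: sidecar      # network alias of the sidecar container
              port_value: 8201      # assumed sidecar listener port
```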

Let’s have a look at the Front Proxy and Sidecar Configuration
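A minimal sketch of what `service_proxy.yaml` might contain, assuming the Envoy v3 API, 8005 as the admin port and 8201 as the listener port, and hypothetical endpoint paths `/api/values` and `/api/health` standing in for the service’s real endpoints:

```yaml
# Hypothetical service_proxy.yaml for the sidecar (Envoy v3 API).
admin:
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 8005              # assumed admin port (published to the host)

static_resources:
  listeners:
  - name: sidecar_listener
    address:
      socket_address:
        address: 0.0.0.0
        port_value: 8201            # assumed listener port (internal only)
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          codec_type: AUTO
          stat_prefix: sidecar_http
          route_config:
            name: service1_route
            virtual_hosts:
            - name: service1
              domains:
              - "*"
              routes:
              # Specific endpoints instead of the front proxy's broad "/api" prefix
              # (the paths are hypothetical examples).
              - match:
                  prefix: "/api/values"
                route:
                  cluster: service1
              - match:
                  path: "/api/health"
                route:
                  cluster: service1
          http_filters:
          - name: envoy.filters.http.router
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

  clusters:
  - name: service1
    connect_timeout: 0.25s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    dns_lookup_family: V4_ONLY
    load_assignment:
      cluster_name: service1
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: service1
                port_value: 80
```

Because Envoy answers requests that match no route with a 404, only the listed endpoints reach `service1`, even though the front proxy forwards the whole `/api` prefix to the sidecar.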

For any queries, feel free to reach out to me. Stay tuned for the next Blog Post!